Most games are shit - this is a true fact, and there will be no arguing about it. And while we cannot really expect every game to be of Portal-level quality, it's quite puzzling why the average quality is so dismal. It's surely not for lack of money - in spite of game piracy being so easy, people pay tens of billions of dollars for video games.

Here's a very interesting slideshow describing the gaming industry. A first good hint of what's wrong with gaming can be seen from this cost breakdown:

So publishers, retailers, marketing, console vendors, and other parasites take almost all the money, and just 16% of what the customer pays goes into development costs. Why is that?

Name recognition

The core of the problem is that games are what economists call "experience goods". No, it doesn't mean games are "experienced" - what it means is that customers have no reliable way of telling whether a game is any good before playing it (and paying for it). So what can customers do instead? Rely on any signals that correlate at all with quality - like being a sequel to a known good game, or being based on something well known from outside gaming.

Let's leave games for a moment. Do you know what the 50 highest-grossing movies ever were (inflation etc. aside for now, it doesn't change that much)? Original content in bold (by which I mean content without major name recognition; I know Forrest Gump was based on a book, Avatar on Pocahontas, and Titanic - well, on the Titanic - but in such cases name recognition didn't play a major role).

- Avatar

- Titanic

- The Lord of the Rings: The Return of the King

- Pirates of the Caribbean: Dead Man's Chest

- The Dark Knight

- Harry Potter and the Philosopher's Stone

- Pirates of the Caribbean: At World's End

- Harry Potter and the Order of the Phoenix

- Harry Potter and the Half-Blood Prince

- The Lord of the Rings: The Two Towers

- Star Wars Episode I: The Phantom Menace

- Shrek 2

- Jurassic Park

- Harry Potter and the Goblet of Fire

- Spider-Man 3

- Ice Age: Dawn of the Dinosaurs

- Harry Potter and the Chamber of Secrets

- The Lord of the Rings: The Fellowship of the Ring

- Finding Nemo

- Star Wars Episode III: Revenge of the Sith

- Transformers: Revenge of the Fallen

- Spider-Man

- Independence Day

- Shrek the Third

- Harry Potter and the Prisoner of Azkaban

- E.T. the Extra-Terrestrial

- Indiana Jones and the Kingdom of the Crystal Skull

- The Lion King

- Spider-Man 2

- Star Wars Episode IV: A New Hope

- 2012

- The Da Vinci Code

- The Chronicles of Narnia: The Lion, the Witch and the Wardrobe

- The Matrix Reloaded

- Up

- Transformers

- The Twilight Saga: New Moon

- Forrest Gump

- The Sixth Sense

- Ice Age: The Meltdown

- Pirates of the Caribbean: The Curse of the Black Pearl

- Star Wars Episode II: Attack of the Clones

- Kung Fu Panda

- The Incredibles

- Hancock

- Ratatouille

- The Lost World: Jurassic Park

- The Passion of the Christ

- Mamma Mia!

- Madagascar: Escape 2 Africa

So 34 of the top 50 (68%), and 16 of the top 20 (80%), are not original content. And most of the best movies ever made are not on the list. And if you think about it, what Pixar (Finding Nemo, Up, Ratatouille, The Incredibles), DreamWorks (Kung Fu Panda and all the Shreks), and Disney (The Lion King) are doing is not so much original content standing on its own as a series of good but fairly formulaic animated films - Pixar could just as well call Ratatouille "Pixar 8: Ratatouille" and people would come for the name recognition (have you ever seen a Pixar movie in which the protagonist was not an adolescent male?), and DreamWorks, well...

Yes, most movies on the list are pretty good, but that's not even strictly necessary - look at #11, for evolution's sake! And more often than not, sequels that are demonstrably worse than the originals earn more money anyway, and it's not just due to inflation. Just look at the list - Matrix, Star Wars, Madagascar, Shrek, and Pirates of the Caribbean all went downhill in quality and skywards in revenue.

I don't know an easy way to measure the relative contributions of genuine quality and name recognition (well, I could perhaps rank-correlate IMDb scores with box office gross, with some Bayesian filtering for rating uncertainty - maybe some other time...), but the latter factor is just huge.
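Since I brought it up, here's roughly what that measurement could look like. This is a minimal sketch, assuming the "Bayesian filtering" is a simple Bayesian average that pulls a film's mean rating toward a prior mean, more strongly the fewer votes it has, followed by a Spearman rank correlation against gross. The function names, the `prior_weight` value and the sample numbers are all made up for illustration; none of it is real IMDb or box office data.

```python
from statistics import mean


def bayesian_average(mean_rating, num_votes, prior_mean, prior_weight):
    """Shrink a film's mean rating toward prior_mean.

    prior_weight acts like that many "virtual votes" at prior_mean, so a film
    with few real votes ends up close to the prior, while one with many votes
    keeps roughly its own average.
    """
    return (num_votes * mean_rating + prior_weight * prior_mean) / (num_votes + prior_weight)


def ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(xs, ys):
    """Plain Pearson correlation coefficient."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def spearman(xs, ys):
    """Spearman rank correlation = Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


if __name__ == "__main__":
    # Hypothetical (mean rating, number of votes, gross in $M) triples -
    # made-up numbers for illustration, not real data.
    films = [(8.9, 900_000, 530), (6.5, 400_000, 430), (8.1, 350_000, 340), (9.4, 40, 5)]
    prior = mean(r for r, _, _ in films)
    quality = [bayesian_average(r, v, prior, prior_weight=25_000) for r, v, _ in films]
    gross = [g for _, _, g in films]
    print(f"Spearman(quality, gross) = {spearman(quality, gross):.2f}")
```

A rank correlation close to 1 would mean revenue mostly tracks quality; a weak one would leave a lot of room for name recognition. Ranks are used rather than raw values so that a handful of mega-grossers don't dominate the result, and the shrinkage keeps a film with 40 enthusiastic votes from outranking everything else.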

You thought movies were bad?

That's how bad the situation with movies is, and movies are the easiest case. Yes, you only know whether a movie is good after you see it - but movies all come in the same format (not counting 3D), are of similar length, and usually fall into a small number of easily classifiable genres - so it's not that hard. Within a single genre you can "almost objectively" say that The Dark Knight was better than Spider-Man 2, or something like that.

Yes, there will be individual differences, but it's a simple problem of low dimensionality - a completely non-personalized IMDb score is a pretty good predictor of how much you'll like a movie. Add or subtract a few points depending on which genres you like, and other trivial criteria, and it gets eerily accurate. And if that's not enough, the Netflix Prize showed that rating just a handful of movies is enough to predict very, very accurately whether you'll like any movie ever made.
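For the curious, one of the families of techniques that worked best in the Netflix Prize was latent-factor models (matrix factorization): every user and every movie gets a short vector of hidden "taste" numbers, and a rating is predicted from their dot product. Below is a toy sketch of that idea, trained with plain stochastic gradient descent. The function names, hyperparameters and the tiny made-up rating matrix are assumptions for illustration; real Prize entries blended many far more elaborate models.

```python
import random


def train_mf(ratings, n_users, n_items, k=2, lr=0.02, reg=0.02, epochs=2000, seed=0):
    """Fit rating ~ mu + dot(P[u], Q[i]) by stochastic gradient descent.

    ratings is a list of (user, item, rating) triples for the known entries only.
    """
    rng = random.Random(seed)
    P = [[rng.gauss(0, 0.1) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.gauss(0, 0.1) for _ in range(k)] for _ in range(n_items)]
    mu = sum(r for _, _, r in ratings) / len(ratings)  # global mean rating
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - (mu + sum(p * q for p, q in zip(P[u], Q[i])))
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step on squared error with L2 regularization.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return mu, P, Q


def predict(model, u, i):
    """Predicted rating of item i by user u."""
    mu, P, Q = model
    return mu + sum(p * q for p, q in zip(P[u], Q[i]))


if __name__ == "__main__":
    # Toy data (made up): users 0-1 like items 0-1, users 2-3 like items 2-3.
    # User 0 has never rated item 1.
    data = [(0, 0, 5), (0, 2, 1), (0, 3, 1),
            (1, 0, 5), (1, 1, 5), (1, 2, 1), (1, 3, 1),
            (2, 0, 1), (2, 1, 1), (2, 2, 5), (2, 3, 5),
            (3, 0, 1), (3, 1, 1), (3, 2, 5), (3, 3, 5)]
    model = train_mf(data, n_users=4, n_items=4)
    print(f"predicted rating of item 1 by user 0: {predict(model, 0, 1):.2f}")
```

The interesting part is the missing entry: user 0 never rated item 1, but because user 0 rates the other items the way user 1 does, the model fills in a high predicted rating. That's the "rate a handful of movies and it predicts the rest" effect, in miniature.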

How about books?

So movies are solved, at least in theory. How about books? It seems that the bestsellers are the Bible and the book of quotations by Chairman Mao, followed by more religious books, more Communist propaganda, a Chinese dictionary... All right, that list makes no sense whatsoever, let's forget about it. And we all know people buy books mostly based on series and author name recognition - J. K. Rowling and Stephenie Meyer can both tell you that.

But even though it's the same story with books, Amazon can be quite decent at recommending them. Unfortunately its algorithm seems to work only for books. Here's what it does with movies (recommendations for A New Hope):

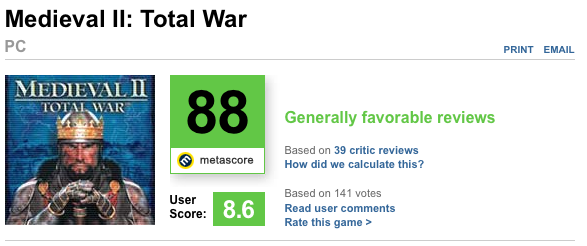

And with games (recommendations for Medieval 2 Total War):

How useless is that?

Back to video games

Game theory time. What choices do game developers and publishers face?

- Make genuinely good games - expensive, risky, and if they're not flashy enough, customers often won't even know these games exist

- Make games that make a good first impression (often thanks to name recognition) but are really shitty once you play them for more than 15 minutes - cheap, easy, and makes shitloads of money

Which one would gamers prefer? Which one will actually happen? That's my point. Until we find a way to reliably tell which games are good before paying for them, most games will be flashy shit.

Since I'm blogging about games sucking, let me give you an example of particularly egregious abuse: "Far Cry 2". It is just like Far Cry, except it's developed by another company, has a different genre, an entirely unrelated world, and not a single character or plot element in common - there's basically nothing, absolutely nothing, linking Far Cry and Far Cry 2.

You know what it is? That's criminal fraud.

Publishers of Far Cry 2 should be prosecuted for fraud. Calling their game something it is not in order to deceive customers is exactly that. It's also widely accepted marketing practice, as if that made it any less criminal. The point of trademarks should be protecting customers from deception, not making shitloads of money for trademark owners as it does now - and it was once like that. A century ago Coca-Cola was sued by the American government because it had changed its formula to no longer contain extracts of coca leaves or kola nuts. That was before corporate interests took over all our governments. Now trademarks no longer serve customers - they serve corporations exclusively.

So how can a gamer find out whether a game is any good? First, the problem is far harder than it is with movies. Games offer a very wide variety of experiences, and it's really difficult to compare different games. Someone might like the mercenary-shooting missions in the original Far Cry but hate the Trigen missions with a passion. By someone I mean virtually every single gamer - but if different parts of the same game can evoke such reactions, how can you give such a game a single score?

Or, even worse, people might absolutely love a game except for a huge number of severe bugs that make it virtually unplayable. Like every single Total War game ever released. You know, it's been 3 years and they still haven't fixed those few semicolons in Medieval 2 Total War that completely break the diplomacy system.

You rarely get such varied reactions to a single movie (the shitty ending appended to Schindler's List for no reason notwithstanding). Maybe with the so-bad-it's-good genre, but that's an entirely different matter.

Example of a movie that 10.6% of people consider so-bad-it's-good, while most think it's just plain bad

So how can you find out whether a game is good? Clearly you cannot rely on Amazon, and game reviewers are some of the most incompetent and corrupt journalists ever. I wonder why Fox News didn't start a game review channel - they would all feel right at home there. Not that they're much different from other journalists who need pre-release access to products provided by publishers to stay in business. Publishers have them by the balls.

Once upon a time there was a tradition of game demos and "shareware" - you could give a game a try, and if you liked it you could purchase the full version. This tradition has almost died, partly because most genres don't really work well in demo format, and partly because it's so easy to get the full version to try.

So as a gamer you have the following three options to get 1 good game:

- Pay for games that make a good first impression, most of which will be shit. You pay for 1 good game and 9 shitty games.

- Bittorrent games without paying, test which ones you like, then buy games you like. You pay for 1 good game and delete 9 shitty games after quick testing.

- Bittorrent games without paying, never pay.

In a perfect world everyone would follow option #2 - publishers would earn money for good games, and nothing for shitty games like Far Cry 2. There's just one problem here - once you've found your game, you really have no incentive to pay for it. Whatever little stigma or risk is attached to piracy, it is the same for option #2 and option #3. And publishers try hard (and fail hard) to make #3 difficult, and to the extent they succeed, they make #2 equally difficult in the process.

As a result almost everyone does #1 or #3. #1 creates an incentive to produce shitty games. #3 creates no incentive whatsoever - and so games stay shitty, gamers stay unhappy, and everyone blames piracy.

Getting rid of piracy would not solve the problem at all - just look at the PlayStation 3. Everyone would have to do #1 - pay loads of money for shitty games - resulting in high prices and shitty games. Nobody would be happy.

The only way #2 could happen in the short term would be some sort of honor system - but it's hard to create an honor system when most publishers are assholes who treat gamers like shit (from unskippable ads every time you start a game to obnoxious DRM), developers get only 16% of what customers pay, and people who do #2 are treated just like those who don't pay for anything. Not to mention option #2 being technically illegal. Honor systems can be extremely powerful, but they just won't happen in an environment like that.

What else could happen? Well, someone might come up with ways of telling whether a game is any good other than bittorrenting it. Remember - once the incentive for creating shitty games disappears, piracy won't be necessary. That doesn't sound terribly likely, given how difficult the problem is. And even if such a system existed, it would have to be used by most gamers, and it would have to be difficult for publishers to abuse. Remember - we already have systems that can tell you with very high accuracy whether you'll like a movie - and yet most people don't use them, going for name recognition instead, resulting in an endless stream of shitty sequels.

Or we could use something like Flattr. You'd pay some sum every month to play any video games you want - and at the end of the month your money would be distributed to the developers of the games you played most (or marked as the ones you liked most). This would be almost as good as option #2 above, and probably the most realistic way to solve the problem for good. Of course publishers won't go for it easily, as they'd lose control over the market, with all the power going to developers and gamers.
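Here's a minimal sketch of the monthly payout step, assuming the split is proportional to hours played (the "liked most" variant would just swap hours for likes). The function `distribute`, the amounts and the game and studio names are hypothetical; this is not a description of how Flattr itself computes payouts. Amounts are in integer cents, and the largest-remainder rule hands out the leftover cents so the shares always add up to exactly what the gamer paid.

```python
from collections import defaultdict


def distribute(monthly_fee_cents, playtime, developer_of):
    """Split one subscriber's monthly fee among developers, pro rata by hours played.

    playtime maps game -> hours played this month; developer_of maps game -> developer.
    Returns developer -> cents. Returns {} if nothing was played.
    """
    hours_per_dev = defaultdict(float)
    for game, hours in playtime.items():
        if hours > 0:
            hours_per_dev[developer_of[game]] += hours
    total = sum(hours_per_dev.values())
    if total == 0:
        return {}
    # Exact (fractional) share of the fee for each developer.
    exact = {dev: monthly_fee_cents * h / total for dev, h in hours_per_dev.items()}
    shares = {dev: int(x) for dev, x in exact.items()}  # round down first
    leftover = monthly_fee_cents - sum(shares.values())
    # Largest remainder: give the leftover cents to the biggest fractional parts.
    by_remainder = sorted(exact, key=lambda d: exact[d] - shares[d], reverse=True)
    for dev in by_remainder[:leftover]:
        shares[dev] += 1
    return shares


if __name__ == "__main__":
    playtime = {"Game A": 30, "Game B": 10, "Game C": 5}
    developer_of = {"Game A": "Studio X", "Game B": "Studio Y", "Game C": "Studio X"}
    print(distribute(1500, playtime, developer_of))
    # {'Studio X': 1167, 'Studio Y': 333}
```

Note that the money follows the developer, not the publisher: two games by the same studio simply add up their hours.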

Until that happens, long live Pirate Bay!